Biological Bombs: An explainer on AI tools for designing pathogens

Advances in AI augur a wave of novel bioweapons, opening the door to lone-wolf and non-state bioterrorism.

Written by Tom Campbell and Dirk van der Kley

Key Points

LLMs make it easier to use existing pathogens as a bioweapon.

AI-powered Biological Design Tools (BDTs) facilitate the creation of novel pandemic-class pathogens. An engineered pathogen has the potential to kill tens of millions.

Current regulation and screening of synthetic DNA customers and orders are not sufficient to deal with this threat.

A coordinated (and enforced) international agreement on AI tools and synthetic DNA is required.

Article

March of this year will mark the 30-year anniversary of Aum Shinrikyo’s Tokyo Subway Sarin Incident, a terrifying series of attacks that killed 13 people and injured more than 5,800. The cult used the nerve agent sarin after initially planning to cause an anthrax epidemic. The group’s lead scientist, Seiichi Endo, was a PhD virologist, yet the group still failed in its efforts to cause widespread death because it lacked sufficient understanding of the bacterium.

What could Endo have done with access to AI?

AI-related biosecurity concerns are increasingly pressing. Designed pandemic-class pathogens would be more lethal than nuclear weapons, yet far more accessible to lone-wolf actors or terrorist groups. Addressing the Senate, Dario Amodei, CEO of Anthropic (the startup behind the chatbot Claude), claimed that without regulation, AI has the potential to “greatly widen the range of actors with the technical capability to conduct a large-scale biological attack.” The rate of improvement in AI models means that this risk is not years away, but only months.

There are few threats of the same magnitude. We have a recent reminder of what a new biological pathogen can do: global excess deaths during COVID were in the tens of millions. AI could potentially create much worse pathogens.

Nations need to act.

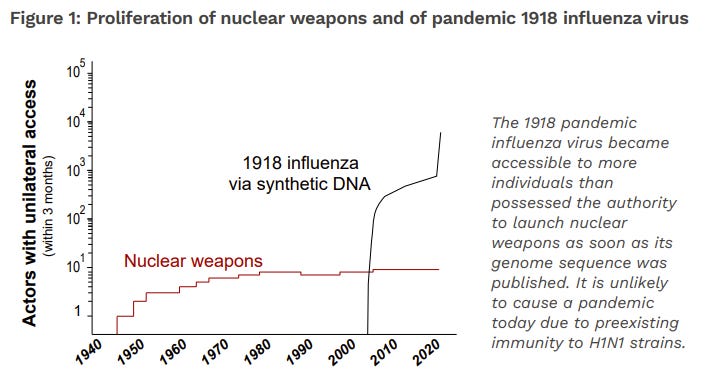

Source: Kevin M. Esvelt, Geneva Paper

A lack of coordinated regulation of synthetic DNA companies only exacerbates this risk, making it easier for rogue actors to get hold of dangerous pathogens. International agreement on regulation of AI tools and synthetic DNA screening is a necessary preventative step.

AI tools for bioweapons

In discussions of biosecurity, it is helpful to distinguish between Large Language Models (LLMs) and more specific Biological Design Tools (BDTs). These technologies are trained on different data and thus pose distinct risks.

Source: Jonas B. Sandbrink, preprint

Large Language Models

LLMs are AI models trained on natural language data, with the aim of predicting and producing information in human-language form. These engines underpin chatbots such as ChatGPT, Claude, and Gemini.

AI chatbots can aid bioterrorism efforts by helping actors overcome knowledge gaps. In one study, non-science students were tasked with engineering a pandemic and given access to chatbots for an hour. The chatbots directed them towards four potential pandemic pathogens, suggested which edits could be made to the genome to improve transmissibility, and even provided a list of DNA synthesis companies that are unlikely to screen orders.

Moreover, chatbots are prone to ‘jailbreaking’ prompt injection attacks that allow users to bypass built-in restrictions (for readers curious as to how easy this is, we recommend trying Lakera’s cybersecurity puzzle).

In this situation, the LLM user still needs to reassemble the synthetic DNA fragments, but the lab space and necessary reagents are estimated (pg. 26 of this report) to be available for less than US$50,000 (and dropping rapidly). Moreover, specialised knowledge is unlikely to be a significant barrier; standard protocols (e.g. for Golden Gate assembly) are readily available online.

Further, we may soon be in a world where “benchtop” DNA synthesisers are common - this would entail a future where an individual with access to even a low-sophistication lab can create organisms from genetic sequences.

Currently, LLMs do not create any new threat, but they may increase accessibility by allowing an untrained individual to navigate public databases more quickly. This is captured in OpenAI’s own testing of GPT-4, which reported that access to the LLM (compared to Internet access alone) improved participants’ ability to accurately detail the steps needed to create a biological threat.

However, LLMs are improving and becoming less prone to hallucinations (false or misleading information), which makes them a better tool for scientific questions. When tested against the GPQA (Graduate-Level Google-Proof Q&A benchmark) dataset of PhD-level science questions, the newer OpenAI o1 model exceeded human performance.

Importantly, the perception that AI lowers barriers to entry is meaningful on its own, as new groups of actors who previously assumed that bioweapons were out of their reach can now access genome datasets, experimental protocols, and information about synthetic DNA far more readily.

Biological Design Tools

BDTs are of more serious concern. This class of AI refers to software or models (such as AlphaFold, RFdiffusion or RosettaCommons) that are specifically trained on biological data, for example DNA or amino acid sequences. The ultimate goal of these models is to link genetic sequence to biological function, thereby facilitating de novo design of sequences with desired functionalities.

The risk, however, is that biological research is inherently dual-use: processes used for medical advances can also be used for bioweapons. In one example published in Nature Machine Intelligence, Collaborations Pharmaceuticals inverted an AI model trained to assess the toxicity of pharmaceuticals, and within 6 hours had generated a library of 40,000 molecules, many of which were more toxic than the nerve agent VX, a potent chemical warfare agent.

As these tools advance biological design, there is a risk that the ceiling of harm for biological misuse will rise. It has been hypothesised that naturally occurring pathogens face a trade-off between transmissibility and virulence. BDTs could enable malicious actors to overcome this trade-off and optimise a pathogen for both traits.

BDTs have the potential to contribute to outbreaks of new pathogens in several ways:

Optimising and designing new virus subtypes that can evade immunity: AI models can generate viable designs for subtypes that can escape human immunity (e.g. for SARS-CoV-2)

Designing characteristics to improve transmissibility: AI developers are working on models that can design persisting genetic changes

Completing protocols for the de novo synthesis of pathogens: AI systems could be used to automate lab work using CROs to improve scalability and facilitate large screening of potential pathogens

Designing genes, genetic pathways or proteins that convert non-human animal pathogens into human pathogens: most infectious diseases in humans arise from non-human animals. AI could predict the characteristics likely to promote this cross-species transfer.

Designing genes, genetic pathways or proteins to selectively harm certain populations: by integrating human genomic data with pathogen data, AI might be able to discern whether a population is more or less susceptible to a pathogen

Zero-shot design of pathogenic agents: barriers to misuse will be lowered if BDTs become capable of generating zero-shot designs that don’t require lab validation

As MIT Professor Dr. Kevin Esvelt wrote in his report for the Geneva Centre for Security Policy, a particular threat would come from pandemic-level pathogens that we have not previously encountered and about which little research exists. As soon as the complete genome sequence is shared, BDTs can be harnessed to generate multiple infectious samples. If this occurs, Esvelt writes (pg. 11), then an individual could “ignite more pandemics simultaneously than would naturally occur in a century.”

The Need for Synthetic DNA Regulation

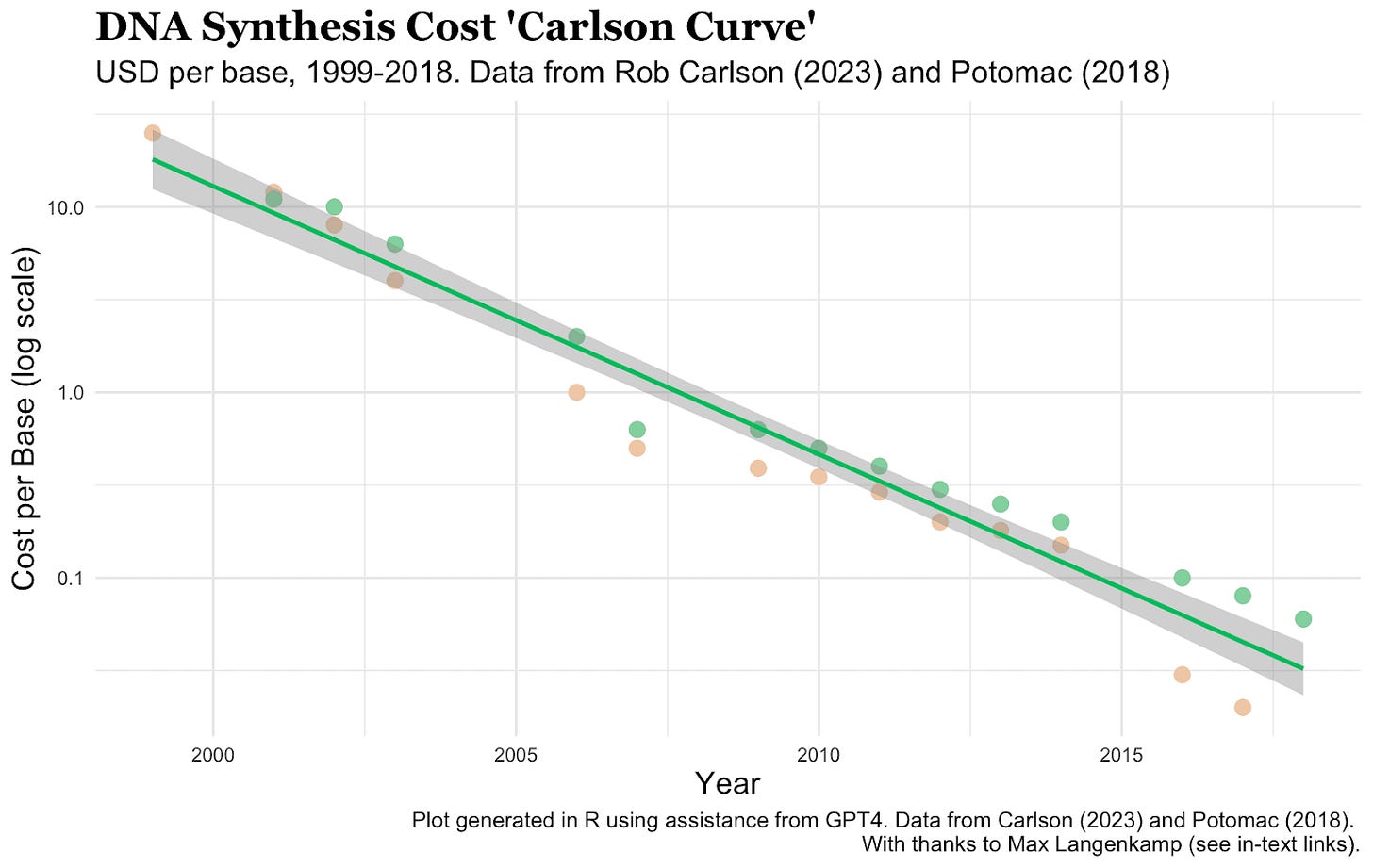

One area where the risk can be mitigated is the step from a computationally designed pathogen to a synthesised DNA sequence. All biological engineering requires custom DNA synthesis, and this has become far more accessible, with prices significantly decreasing over the past two decades.

Source: Copied from Founder Pledge, International Biosecurity and Biosafety Initiative for Science

However, commercial DNA synthesis desperately needs regulation; there is no legal requirement for providers to screen their customers or their orders to ensure that the requested DNA or RNA constructs are not used for harmful purposes. The International Gene Synthesis Consortium (IGSC) requires member companies to adhere to a Harmonized Screening Protocol, but this has not been updated since 2017.

The lack of a universally accepted standard for screening orders means that current systems are poorly equipped and can be easily evaded. In an FBI-overseen experiment, researchers from MIT split up the genome of the 1918 pandemic influenza virus into DNA fragments and added camouflaging sequences. The ordered fragments could then easily be reassembled in the lab into a hazardous construct. The researchers concluded that nearly all DNA synthesis screening practices failed to reject even lightly disguised orders.

Regulation of commercial DNA providers alone is not enough. Next-generation benchtop DNA synthesisers will decentralise the synthetic DNA market, and if the screening criteria are built into the device, actors could jailbreak it to bypass them. Regulation should therefore ensure that benchtop synthesisers require a network connection, allowing an external body to regularly update screening systems to account for newly identified risks.

Going beyond this, an international organisation to oversee DNA synthesis companies and to regularly evaluate their screening protocols through red team experiments will help to mitigate risks.

This needs global collaboration - which is in short supply

International cooperation is needed to mitigate biological risks associated with AI, but at present there is no advance planning that distinguishes between LLMs and BDTs.

Fortunately, the seeds of cooperation have already been planted. In November 2023, the UK hosted the first AI Safety Summit, with a major takeaway being that AI advances are contributing to increased biological risks. The resulting Bletchley Declaration is a move in the right direction, with notable signatories including the US, India, China and the EU, indicating a collective recognition that biosafety risks are bigger than the current state of geopolitics.

Around the same time, the Biden administration signed Executive Order 14110, which directed the US government to develop standards and implement actions to ‘protect against the risks of using AI to engineer dangerous biological materials’. The order requires companies to notify the government prior to releasing models trained on biological sequence data.

However, Trump has already committed to dismantling this.

Trust issues with China will remain. Claims of a lab leak of the virus responsible for COVID-19 endured for several years; it was only last month that the virologist at the heart of the accusations came forward with evidence to dismiss them. Transparency over AI will become another salient issue: while China has already implemented national regulations on AI, critics have dismissed these as unimportant given the power of the CCP to disregard its own rules.

A second difficulty is that most countries simply lack the monitoring capabilities required to place import controls on synthetic DNA orders, or to detect lab leaks early. This further emphasises the need for centralised regulation, which can be supported by services like SecureDNA to ensure biosafety without compromising data confidentiality.

It remains to be seen whether early agreements for cooperation are meaningful in implementation, but a unified direction will go some way to mitigating future risks.